Innovative HP machine may start to compute by end of 2016.

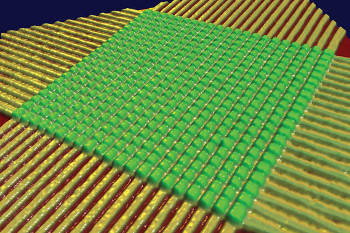

Overlapping nanowires, with the memristor elements placed at the intersections. Integrated-circuit design is currently based on three fundamental elements: the resistor, the capacitor, and the inductor. A fourth element was described and named in 1971 by Leon Chua, a professor at the University of California, Berkeley’s Electrical Engineering and Computer Sciences Department, but researchers at HP Labs didn’t prove its existence until April 2008. This fourth element, the memristor (short for memory resistor), has properties that cannot be reproduced through any combination of the other three elements.

When Hewlett-Packard first unveiled plans to develop an entirely new computer architecture at its Discover user conference in June, it outlined a design that could potentially deliver a much simpler and faster system than is currently available. This was echoed in a speech given by HP Labs Director Martin Fink at the Software Freedom Law Center’s 10th anniversary conference in New York.

The company originally said it expected to offer commercially available computers based on the design within 10 years. However, it now appears that HP Labs expects to have the first prototype ready as early as the end of 2016. Fink commented that this would give the company a few extra years to iron out any bugs. He also said that if the design proves successful, everyone in the IT business, from computer scientists to system administrators, may have to rethink their jobs.

HP’s new concept rethinks the von Neumann computer architecture that has been dominant since the dawn of computing, in which a computer consists of a processor, working memory and storage. To run a program, the processor loads the instructions and data from storage into memory, performs the operations, then copies the results back to disk for permanent storage if necessary.
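The load, compute, copy-back cycle described above can be sketched in a few lines of Python. This is only an illustration on a conventional system: the file stands in for storage, the local variable for working memory, and the names `make_counter_file` and `load_compute_store` are hypothetical, not anything from HP.

```python
import os
import struct
import tempfile


def make_counter_file() -> str:
    """Create a temporary file holding one 64-bit integer set to 0 (stand-in for disk storage)."""
    fd, path = tempfile.mkstemp()
    with os.fdopen(fd, "wb") as f:
        f.write(struct.pack("<q", 0))
    return path


def load_compute_store(path: str) -> int:
    """Increment the stored counter the conventional way: load, compute, copy back."""
    # 1. Load: copy the bytes from storage into working memory.
    with open(path, "rb") as f:
        raw = f.read(8)
    (value,) = struct.unpack("<q", raw)

    # 2. Compute: the processor works only on the in-memory copy.
    value += 1

    # 3. Store: copy the result back to storage so it survives power loss.
    with open(path, "wb") as f:
        f.write(struct.pack("<q", value))
    return value
```

Every update pays for two copies, one in each direction, even though only a single number changes.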

Because the manufacturing techniques for producing today’s working memory, RAM, are reaching their limits, the industry knows it will have to move to another form of memory. Various experimental designs for next-generation memory are currently being developed, and HP is working on its own version, the memristor, on which the Machine is based.

The new memory designs share the common characteristic of being persistent: if they lose power, they retain their contents, unlike today’s RAM. This means they can take the place of traditional storage mechanisms, such as hard drives or solid-state disks. As a result, computers can operate directly on the data where it resides (on the memristors, in HP’s case), so there would be no need to continually move data between working memory and storage.

That seems simple enough, but the ramifications are huge.

Fink explained that such a machine would require an entirely new operating system. Most of the work an OS does involves copying data back and forth between memory and disk. The Machine eliminates the notion of data reads or writes. It also means that, in theory, computers could be much more powerful than today’s.
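The closest everyday analogy to operating on data in place is a memory-mapped file, sketched below in Python. This is an assumption-laden illustration, not HP’s design: on current hardware the operating system still copies pages between disk and RAM behind the scenes, and the function names `make_record_file` and `increment_in_place` are hypothetical.

```python
import mmap
import os
import struct
import tempfile


def make_record_file() -> str:
    """Create a temporary 8-byte file holding a 64-bit integer set to 0."""
    fd, path = tempfile.mkstemp()
    with os.fdopen(fd, "wb") as f:
        f.write(struct.pack("<q", 0))
    return path


def increment_in_place(path: str) -> int:
    """Increment the stored counter through a memory mapping, with no explicit read or write calls."""
    with open(path, "r+b") as f:
        # Map the first 8 bytes of the file into the program's address space.
        with mmap.mmap(f.fileno(), 8) as view:
            # The program treats the persistent bytes as ordinary memory.
            (value,) = struct.unpack_from("<q", view, 0)
            struct.pack_into("<q", view, 0, value + 1)
            # On today's systems the change must still be flushed to storage.
            view.flush()
            return value + 1
```

In a machine built on persistent memory, the mapping step and the flush would in principle be unnecessary, because the memory the program addresses would itself be the permanent copy.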

Another advantage of the Machine architecture is simplicity, Fink added. Today, the average system may have between nine and 11 layers of data storage, from the super-fast L1 caches to the slow disk drives. Each layer is a trade-off between persistence and speed. This hierarchy leads to a lot of design complexity, almost all of which can be eliminated by the flatter design of a single, persistent, fast memory.

“Our goal with the machine is to eliminate the hierarchy,” Fink said.

Copyright © 2014, DPNLIVE – All Rights Reserved.